AI: Images to 3D Models

What are the possibilities of 3D modelling with AI-boosted imagery?

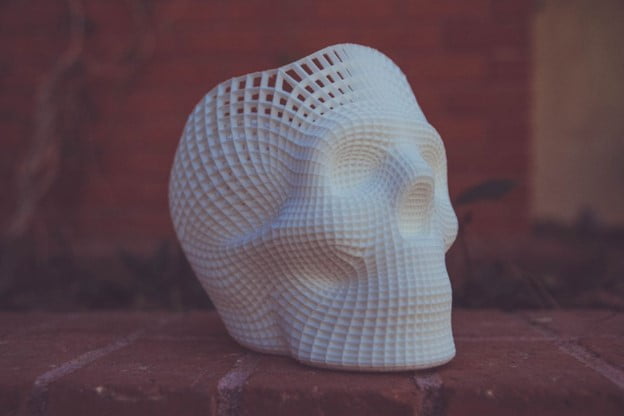

AI is now regularly used to create 3D models from images. Search YouTube for “3D models AI” and you will find a seemingly endless list of videos in which YouTubers show how they’ve transformed an image into a 3D model using AI.

At Gemmo, we’ve worked with a few clients to turn images into 3D models as well. So what sets our approach apart from others? We focus on:

- Assisting clients from beginning to end with their AI needs and support.

- Reaching their objectives and business goals with the use of AI.

- Communicating and explaining problems and solutions in a way that is understandable, achievable, and sensible.

Current Market Use – Personal Users

While 3D model end products on YouTube may look super cool and sleek, a lot of work goes into creating the AI behind them. Usually, these videos are for personal use by people who have a knack for computer technology and have a creative mind to build such models. These videos focus on DIY.

However, here at Gemmo, we help businesses build AI for commercial use. Businesses need a team that can help them with all their AI needs, especially when they know very little about the technology. Thus, this article will showcase the work we do to help our clients turn their images into 3D models.

The Basics for Commercial Use

We start with the basics first, as it is always important to know your business goals, objectives, risks, and output solutions. One of the most common business goals is to map industrial indoor environments by creating 3D models that specifically use 360° photography.

In layman’s terms, the goal could be to create Virtual Tours and Virtual Staging, which would help estate agencies arrange viewings during COVID and/or when the rooms or buildings are not yet accessible due to other reasons. Prior to using AI, a common solution would be to use laser scanners to create the Virtual Tour or Virtual Staging images.

Laser scanners capture depth very well, but we soon discovered that the imagery they produce is poor. Improving image quality would therefore give our clients a solution that lets them compete with other companies in their market and gain a competitive advantage.

When turning images into 3D models, some common challenges in achieving better image quality are:

- Producing depth images from 360° photography that are usable for commercial purposes.

- Removing distortions, artefacts, and the various types of noise in the depth image.

- Increasing the resolution of the depth images.
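To make the second challenge more concrete, here is a minimal sketch of a classical, non-AI baseline: a 3x3 median filter over an equirectangular depth map. It is only an illustration, not the method used in the projects described here. The function name `median_filter_depth`, the convention that `0.0` marks an invalid pixel, and the use of plain Python lists (rather than an array library) are all assumptions made for readability.

```python
from statistics import median


def median_filter_depth(depth, invalid=0.0):
    """Apply a 3x3 median filter to an equirectangular depth map.

    `depth` is a list of rows of floats. Columns wrap around horizontally,
    because the left and right edges of a 360° panorama are adjacent; rows
    are clamped at the top and bottom. Pixels equal to `invalid` are ignored
    as neighbours and are left unchanged in the output.
    """
    height, width = len(depth), len(depth[0])
    out = [row[:] for row in depth]  # copy, so the input is not modified
    for y in range(height):
        for x in range(width):
            if depth[y][x] == invalid:
                continue
            window = []
            for dy in (-1, 0, 1):
                yy = min(max(y + dy, 0), height - 1)  # clamp vertically
                for dx in (-1, 0, 1):
                    value = depth[yy][(x + dx) % width]  # wrap horizontally
                    if value != invalid:
                        window.append(value)
            out[y][x] = median(window)
    return out
```

A single spike in an otherwise flat region is replaced by the value of its neighbours, while invalid pixels stay marked as invalid. A filter like this also blurs fine geometric detail, which is one reason learned approaches are attractive for commercial-quality output.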

Your AI Needs – Images to 3D Models – Risk Assessment

As with every project, businesses must be aware of the risks associated with such an undertaking. Many risks are associated with data, especially if it is disorganised.

First, artificially created in-house datasets give poor approximations and poor results when compared to real-world data. Real-world image data for 3D modelling usually contains more detail, texture, and lighting variation than in-house data. This is a risk that we are aware of.

Second, how the data is organised, what material it is collected on, and how it is collected are key elements at the start of any AI project. More importantly, the datasets provided at the beginning of a project sometimes do not produce the expected results, which can delay the commercialisation of the product. Thus, the quality of the input data, maps, and images is very important.

1. Create Step-by-Step Guides for All In-House Manual Tasks to Prepare for AI

Other risks tend to derive from tasks that have been completed manually in-house over long periods of time and now need to be optimised and made available for market use. When tasks are done manually in-house, key network parameters are sometimes forgotten or not noted at the beginning of the development stage.

When these parameters are neither written down nor revisited, optimal value sets might be missing or might only be discovered further into the process. Thus, it is important to have detailed step-by-step procedures from imagery to 3D models in order to make AI implementation more seamless.
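One lightweight way to keep network parameters from getting lost is to store them next to every experiment in a machine-readable file. The sketch below shows the idea with a small dataclass serialised to JSON. The class name `RunConfig`, its fields, and the example values are illustrative assumptions, not the parameters of any actual project.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RunConfig:
    """Hypothetical record of the settings used for one training run."""
    model_name: str
    dataset: str
    input_height: int
    input_width: int
    learning_rate: float
    batch_size: int
    epochs: int
    notes: str = ""


def config_to_json(config):
    """Serialise a RunConfig to a stable, human-readable JSON string."""
    return json.dumps(asdict(config), indent=2, sort_keys=True)


def config_from_json(text):
    """Rebuild a RunConfig from the JSON produced by config_to_json."""
    return RunConfig(**json.loads(text))


# Example values are placeholders chosen only for illustration.
example = RunConfig(
    model_name="UniFuse",
    dataset="Matterport3D",
    input_height=512,
    input_width=1024,
    learning_rate=1e-4,
    batch_size=6,
    epochs=100,
    notes="baseline reproduction",
)
print(config_to_json(example))
```

Saving the resulting JSON alongside each set of results means a value that worked well months ago can be recovered without relying on memory.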

2. Test a Variety of Methods and Approaches that Work for You

Moreover, methodology and approaches are also risks that need to be evaluated. Some approaches or methods require substantial modifications in order to work, especially to generate usable commercial outputs.

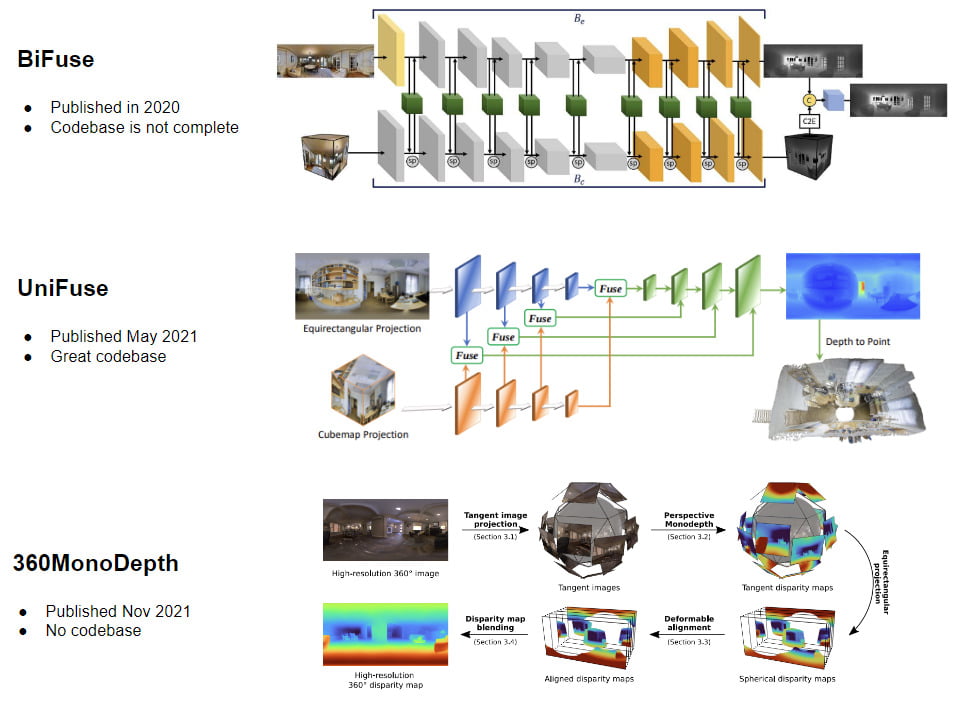

Examples of different models and approaches include BiFuse, UniFuse, and 360MonoDepth. Each model has a range of expected quantitative improvements. Furthermore, models can be either pre-trained or newly developed.

So what did we actually develop? How did Gemmo use AI from images to create 3D models?

What we developed with our clients was multi-layered. We developed a way to find out why models stop learning. At the beginning of the project, we studied the problem in detail to understand why the current BiFuse model performed poorly on our client’s test set.

Gemmo performed a root cause analysis to determine whether the BiFuse approach was well suited to our clients’ use case. We found that it was not the best approach. Our goal was to take existing low-quality, low-resolution images and render them into 3D models for Virtual Tours and Virtual Staging.
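For readers wondering how a depth image becomes 3D geometry at all, here is a minimal sketch of the usual first step: turning an equirectangular depth map into a point cloud. It assumes that each depth value is the radial distance from the camera along the viewing ray, that the y axis points up, and that `0.0` marks an invalid pixel. These conventions, and the function name `equirect_depth_to_points`, are assumptions for illustration; real datasets and tools may use different ones.

```python
import math


def equirect_depth_to_points(depth, invalid=0.0):
    """Convert an equirectangular depth map into a list of (x, y, z) points.

    `depth` is a list of rows of floats. Each pixel centre is mapped to a
    longitude in [-pi, pi) and a latitude in (-pi/2, pi/2), and the depth is
    treated as the distance from the camera along that direction.
    """
    height, width = len(depth), len(depth[0])
    points = []
    for v, row in enumerate(depth):
        # Top row of the image looks up (positive latitude).
        lat = math.pi / 2 - (v + 0.5) / height * math.pi
        for u, d in enumerate(row):
            if d == invalid:
                continue  # skip pixels without a depth measurement
            lon = (u + 0.5) / width * 2 * math.pi - math.pi
            x = d * math.cos(lat) * math.sin(lon)
            y = d * math.sin(lat)
            z = d * math.cos(lat) * math.cos(lon)
            points.append((x, y, z))
    return points
```

Because every valid pixel becomes one 3D point, any noise or distortion in the depth image shows up directly in the geometry, which is why depth quality matters so much for Virtual Tours and Virtual Staging.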

We benchmarked other approaches and methodologies to create a qualitative and quantitative assessment framework that enabled us to evaluate results throughout the entire duration of the project. Simultaneously, with the help of our clients and their teams, we created a large-scale dataset that monitored the test images captured within industrial engineering spaces.
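The quantitative side of such a framework can be sketched with a few metrics that are commonly reported for monocular depth estimation: mean absolute error (MAE), root mean squared error (RMSE), absolute relative error, and the share of pixels whose prediction is within a factor of 1.25 of the ground truth. This article does not state exactly which metrics were used in the project, so the selection below and the function name `depth_metrics` are assumptions.

```python
import math


def depth_metrics(pred, gt, threshold=1.25):
    """Compute common depth-estimation metrics over flat lists of depths.

    Pixels whose ground-truth depth is not positive are treated as invalid
    and skipped. Returns a dict with MAE, RMSE, absolute relative error, and
    the fraction of pixels with max(pred/gt, gt/pred) below `threshold`.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    n = len(pairs)
    abs_err = [abs(p - g) for p, g in pairs]
    mae = sum(abs_err) / n
    rmse = math.sqrt(sum(e * e for e in abs_err) / n)
    abs_rel = sum(e / g for e, (_, g) in zip(abs_err, pairs)) / n
    # Non-positive predictions can never satisfy the ratio test.
    inliers = sum(
        1 for p, g in pairs if p > 0 and max(p / g, g / p) < threshold
    )
    return {"mae": mae, "rmse": rmse, "abs_rel": abs_rel, "delta1": inliers / n}
```

Lower is better for the three error metrics, and higher is better for `delta1`. Tracking the same numbers for every model throughout a project makes it possible to tell a real improvement from a change that only looks better on a few hand-picked images.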

Next, we focused on the selected approach. We used the latest methods described in the paper “BiFuse: Monocular 360° Depth Estimation via Bi-Projection Fusion” to reproduce the network. The options we tried included the Matterport3D, Stanford2D3D, PanoSUNCG, and 360D datasets, and the BiFuse, UniFuse, and 360MonoDepth models. For transparency, here are some visual representations of our example outputs for each method.

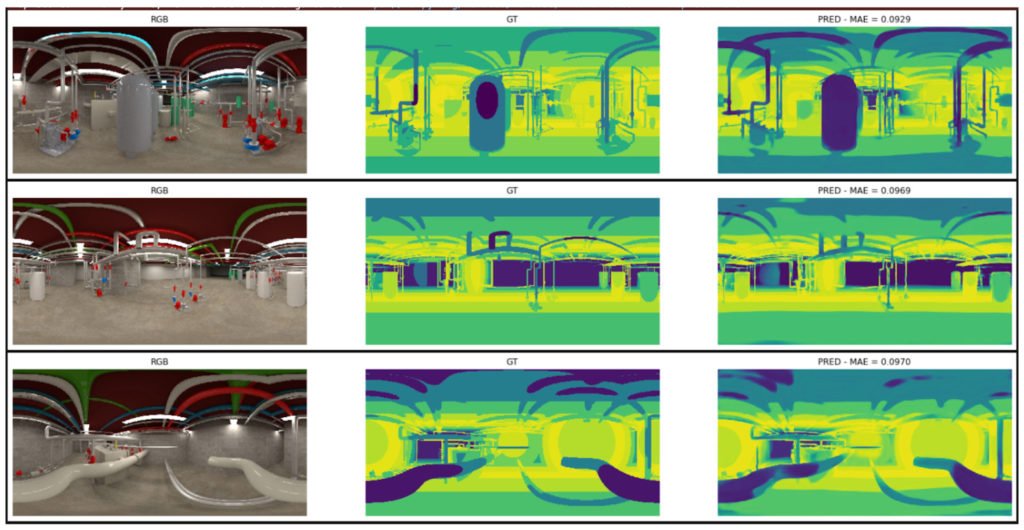

Fine-tuned Model Results:

Pre-trained Model Results:

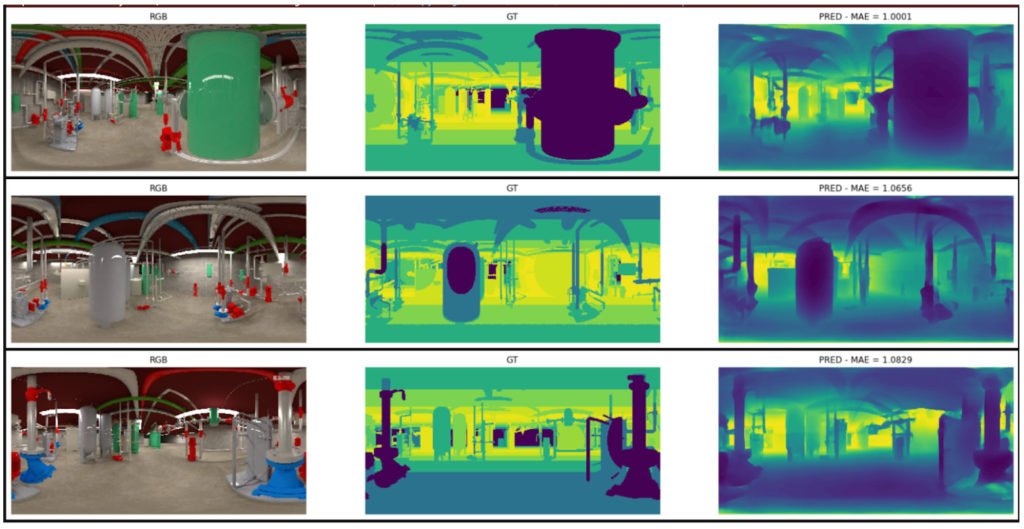

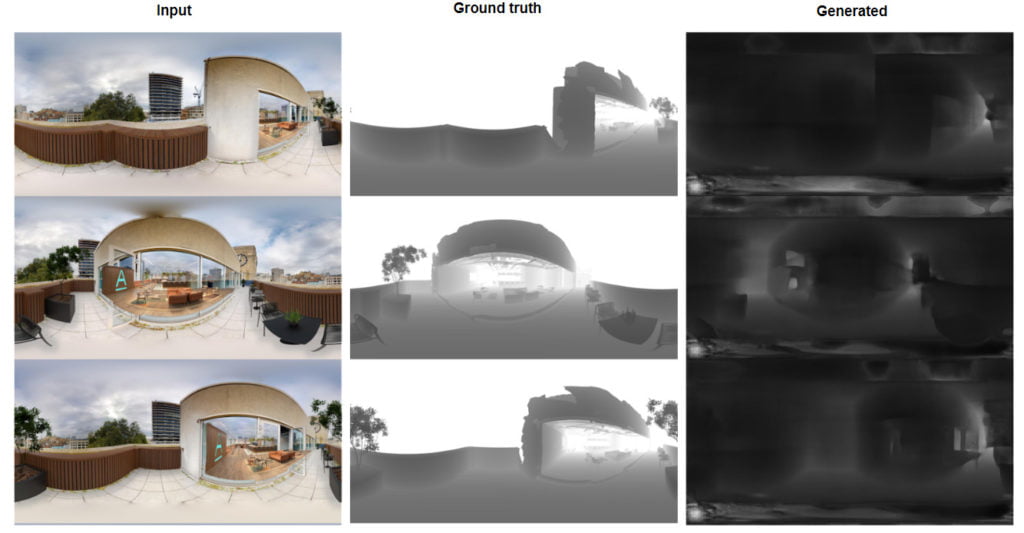

Real-world Data Results:

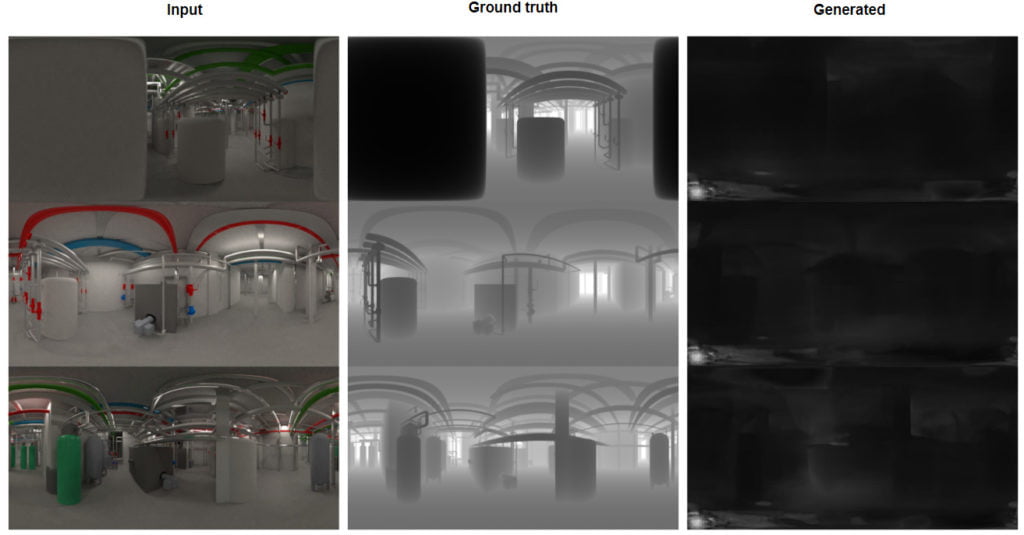

Synthetic Data Results:

Outcome And Reaching Output Goals

In the end, we retrained the model using the Matterport dataset and made sure that the quantitative results were on par with the ones reported by the authors of the above-mentioned paper. Only then did we train the model further, using transfer learning, on the augmented dataset.

We also 1) benchmarked the performance of the newly trained model on a portion of the in-house dataset that the model had never seen at training time, 2) profiled all the code and made sure that the algorithm’s computational load was in line with expectations, and 3) highlighted the main sources of error and determined a roadmap to correct them.
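Benchmarking on data the model has never seen only works if the held-out images stay held out from one experiment to the next. One simple way to guarantee this, sketched below, is to assign each image to the training or test set based on a hash of its identifier, so the assignment does not depend on file order or random seeds. The function names and the 20% default are hypothetical and are not taken from the project.

```python
import hashlib


def is_held_out(image_id, test_percent=20):
    """Return True if this image belongs to the held-out test set.

    The decision depends only on the image identifier, so it stays the same
    across runs, machines, and dataset orderings.
    """
    digest = hashlib.sha256(image_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100 < test_percent


def split_dataset(image_ids, test_percent=20):
    """Split identifiers into (train, test) lists using is_held_out."""
    train, test = [], []
    for image_id in image_ids:
        if is_held_out(image_id, test_percent):
            test.append(image_id)
        else:
            train.append(image_id)
    return train, test
```

With a split like this, new images can be added to the dataset later without moving any existing image from the test set into the training set.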

The best method for our client was UniFuse. Here are some more technical examples of the difference between BiFuse and UniFuse for those who want to go deeper.

Qualitative Comparison – A Matter of Importance

At Gemmo, we don’t just pick the model or approach that we are already familiar with or have already developed. Instead, we fine-tune a model or approach to fit the needs of the client. What matters most is a qualitative comparison of each method and approach to see which one best suits our clients’ needs and goals.

Then, we create an AI algorithm based on that. We continually test and re-test, looking for an output of best and worst results for each model and approach in order to determine the right one that meets our client’s business output goals.

We don’t determine this alone. We communicate with our clients and build charts and graphs to show the consistency and results of each model.
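The search for best and worst results per model can be sketched in a few lines: given one error value per test image, rank the images and keep the extremes for visual inspection. The function name `best_and_worst`, the error values, and the image names below are made up for illustration.

```python
def best_and_worst(errors_by_image, k=3):
    """Return the k lowest-error and k highest-error images for one model.

    `errors_by_image` maps an image name to a single error value (for
    example, a per-image mean absolute depth error). Both result lists
    contain (name, error) pairs; the worst list starts with the largest error.
    """
    ranked = sorted(errors_by_image.items(), key=lambda item: item[1])
    best = ranked[:k]
    worst = ranked[-k:][::-1]
    return best, worst


# Hypothetical per-image errors for two models on the same test images.
results = {
    "BiFuse": {"hall.jpg": 0.42, "plant_room.jpg": 0.91, "office.jpg": 0.30},
    "UniFuse": {"hall.jpg": 0.35, "plant_room.jpg": 0.60, "office.jpg": 0.28},
}
for model, errors in results.items():
    best, worst = best_and_worst(errors, k=1)
    print(model, "best:", best, "worst:", worst)
```

Looking at the actual images behind the worst cases is often more informative than an average score, because it shows whether a model fails in situations that matter for the client's use case.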

Our final outcome: UniFuse. UniFuse is still state of the art in our qualitative comparison.

Conclusion on the Benefits of AI for Images to 3D Models

At Gemmo, we wish to help small- to medium-sized businesses with their AI needs, especially those unfamiliar with or new to AI. We helped our clients transform their images into 3D models with the use of AI technology. With communication and consistency, Gemmo is able to use and build test cases that become successful outputs for our clients.

We:

- evaluate the best model and approach for them by running multiple tests on furnished, unfurnished, and PlantRoom images, correlating with MP3D and SCRATCH.

- assess if the model’s outputs are actually usable.

- conduct a qualitative comparison and opt for the best model and approach that works with our clients’ needs and overall business goals.

Let us help you with yours today.

Work with Gemmo today for your manufacturing and AI needs.

Author: Samantha Sink