Exam Invigilation AI: Remote testing with machine learning

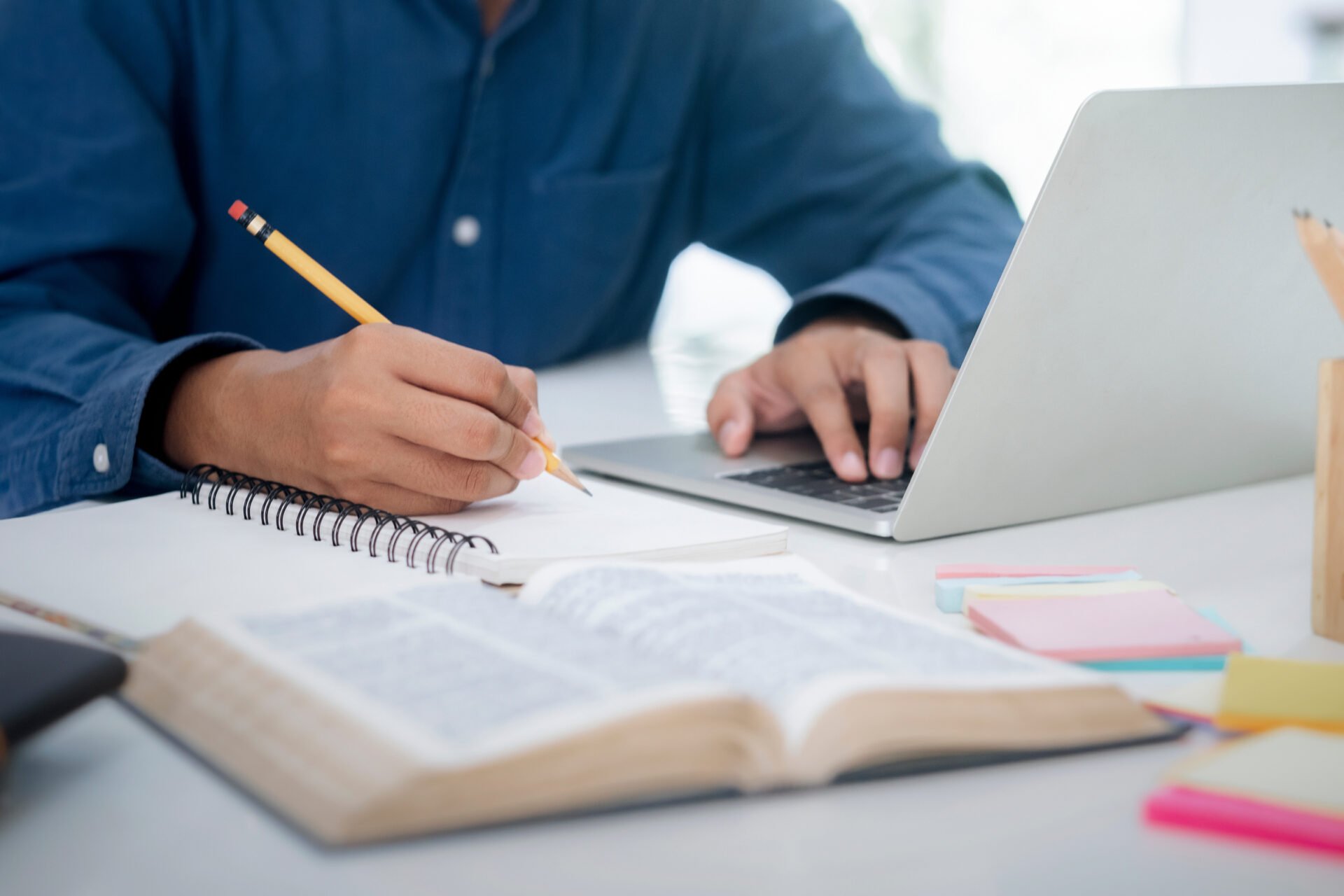

Exams have always been the cornerstone of any educational or professional certification process. However, ensuring the integrity of these assessments in a remote, digitally-driven environment poses a significant challenge.

Not all organisations have the budget for comprehensive human supervision, which can lead to inconsistencies and potential malpractice in remote settings.

It’s often said that necessity is the mother of invention. Faced with this challenge of ensuring exam integrity with limited human supervision, the Gemmo team embarked on an innovative R&D project.

Our primary aim? To bridge the gap between human supervision and its absence during exams with the power of Machine Learning.

Harnessing AI for High-Precision Incident Detection

The project aimed to develop a Proof of Concept for remote invigilation, utilising Machine Learning to automate incident detection and enhance supervision tasks. In line with this goal, we set three objectives:

- To identify incidents that can be automatically detected with high precision.

- To design bespoke ML models that could efficiently detect these incidents.

- To establish three analysis pipelines for assisting human invigilators, thereby accelerating and scaling supervision tasks.

A Three-Pronged Approach: Prioritise, Experiment, and Evaluate

Our journey to achieving these ambitious objectives was divided into three critical phases:

1. Prioritise: Setting the Stage for Success

The first phase involved working closely with the client’s team to review project KPIs, identify the key incidents to detect, and set functional and non-functional requirements. The centrepiece of this phase was the creation of an in-house dataset featuring actors simulating a variety of incidents in diverse environments.

2. Experiment: Innovating with Machine Learning

Next, we used various computer vision models to extract features describing the exam environment, the examinee, and their behaviour. This enabled us to detect even the most challenging incidents indirectly, by analysing the candidate’s behaviour rather than the incident itself.
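To illustrate the idea of detecting incidents indirectly from behaviour, here is a minimal sketch that turns per-frame behavioural flags into timed incidents. It is not the project's actual pipeline: the `Incident` type, the `frames_to_incidents` function, the "looking away" signal, and the duration threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Incident:
    """A flagged time span in the exam recording (hypothetical type)."""
    start_s: float
    end_s: float


def frames_to_incidents(
    flags: Sequence[bool], fps: float, min_duration_s: float
) -> List[Incident]:
    """Group consecutive flagged frames into incidents.

    `flags[i]` is assumed to be the output of some upstream vision model,
    e.g. True when the candidate appears to be looking away in frame i.
    Runs shorter than `min_duration_s` are dropped to reduce false alarms.
    """
    incidents: List[Incident] = []
    run_start = None
    # A trailing False acts as a sentinel that closes any run still open.
    for i, flagged in enumerate(list(flags) + [False]):
        if flagged and run_start is None:
            run_start = i
        elif not flagged and run_start is not None:
            if (i - run_start) / fps >= min_duration_s:
                incidents.append(Incident(run_start / fps, i / fps))
            run_start = None
    return incidents


# Made-up example: one frame per second, incidents must last at least 2 s.
looking_away = [False, True, True, True, False, True, False]
print(frames_to_incidents(looking_away, fps=1.0, min_duration_s=2.0))
```

In this made-up example the three-second run is reported and the single flagged frame is ignored. Raising the minimum duration trades recall for precision, which matters when the goal is high-precision detection that assists a human invigilator.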

3. Evaluate: Ensuring Impact and Improvements

The final phase was focused on benchmarking the performance of the newly trained models, profiling the code, and analysing the sources of error. We delivered a comprehensive evaluation of the results to our client, along with a working prototype showcasing the groundbreaking capabilities of our approach to invigilation.
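As a rough illustration of what benchmarking incident detectors can involve, the sketch below computes precision and recall per incident type from clip-level labels. It assumes each clip is annotated with a set of incident labels. The function name `per_incident_metrics`, the label names, and the data are invented for the example and are not results from the project.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def per_incident_metrics(
    truth: Iterable[Set[str]], predicted: Iterable[Set[str]]
) -> Dict[str, Tuple[float, float]]:
    """Return {incident label: (precision, recall)} over paired clips."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(truth, predicted):
        for label in p & t:   # detected and actually present
            tp[label] += 1
        for label in p - t:   # detected but not present (false alarm)
            fp[label] += 1
        for label in t - p:   # present but missed
            fn[label] += 1

    metrics: Dict[str, Tuple[float, float]] = {}
    for label in set(tp) | set(fp) | set(fn):
        detected = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / detected if detected else 0.0
        recall = tp[label] / actual if actual else 0.0
        metrics[label] = (precision, recall)
    return metrics


# Made-up annotations for four clips (hypothetical incident labels).
truth = [{"phone"}, {"phone"}, set(), {"second_person"}]
predicted = [{"phone"}, set(), {"phone"}, {"second_person"}]
print(per_incident_metrics(truth, predicted))
```

Reporting the two metrics separately per incident type makes it easier to see which detectors are precise enough to run automatically and which still need a human in the loop.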

Deliverables and Achievements: A New Era of Exam Invigilation

The project’s successful completion resulted in numerous key deliverables, including:

- An in-house video dataset featuring a range of simulated exam incidents

- Machine Learning models trained for environment, candidate, and behavioural incident detection

- Comprehensive performance evaluation report

- A working prototype featuring high-accuracy incident detectors

Throughout the project, rigorous acceptance tests were conducted to ensure that all milestones were met, all deliverables were achieved, and the models’ performance was up to the mark.

Exam Invigilation AI: Pioneering the Future of Remote Assessments

This project points towards a new era in exam invigilation, merging machine learning with traditional supervision to enhance scalability, speed, and accuracy. With the ‘AI in Education’ market predicted to exceed $80 billion by 2030, exam invigilation is likely to be part of this growth trajectory.

Finally, we envisage our machine-learning-based approach leading the way towards more cost-effective, efficient, and scalable solutions in exam supervision.