Now in AI: The Truth Behind the Trends

Several AI trends have surfaced in the public eye in recent days. Let’s take a look at some that have caught Gemmo AI’s attention this week.

The Skeptic’s Lens: Gary Marcus, the Nouriel Roubini of AI: Questioning AI’s Perfection

AI expert Gary Marcus has warned that machine learning could cause “catastrophic rather than existential risk”. The cognitive scientist and bestselling author said it was unlikely that machine learning tools such as ChatGPT could trigger human extinction. However, appearing on Yahoo Finance’s ‘The Crypto Mile’, Marcus also stressed the downsides of AI tools in the hands of bad actors, such as the creation of deepfakes and other risky applications.

The scientist also addressed the open letter calling for a pause on training AI systems more powerful than GPT-4, which he signed along with thousands of others: “We do need more research into making AI that we can trust, that doesn’t make stuff up.” He explained that the letter does not aim to stop AI development. Instead, it calls for further research to be completed before a new model is launched: “Nobody said let’s stop researching AI altogether. So those of us who signed a letter said, let’s try to make AI that’s more trustworthy and reliable and doesn’t cause problems.”

Overall, Marcus explained that there is little chance machine learning could cause human extinction, and that we are at much greater risk from malicious actors misusing these tools. According to Marcus, “I don’t think [human extinction] will happen but there could be catastrophes that come out of AI. And the fundamental problem is that the tools we’re using right now are called black boxes, which means we don’t understand what goes on inside them.”

The Economic Footprint of AI: Decoding the Costs of Large Language Models

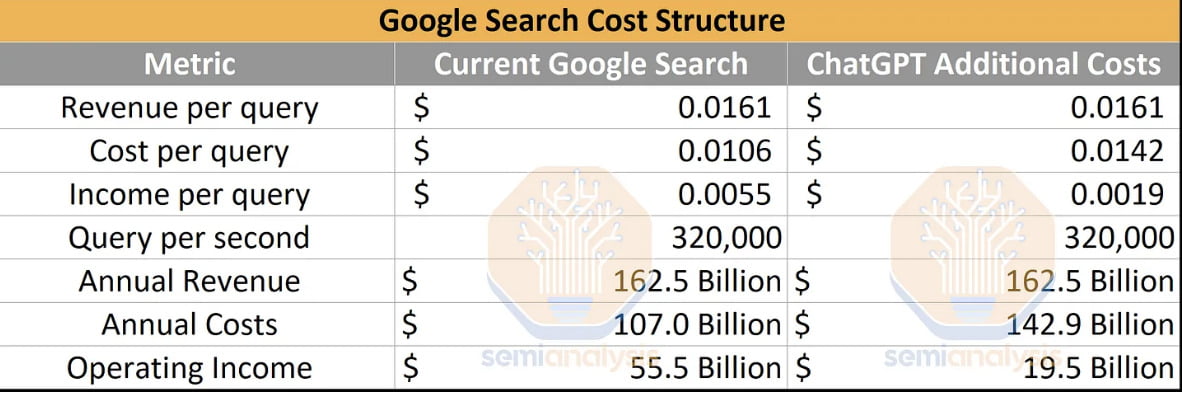

Models like ChatGPT are more than just technical marvels; they’re economic behemoths. With daily compute costs estimated at a staggering $694,444, the financial footprint of AI is monumental. As OpenAI’s ChatGPT and other large language models (LLMs) weave their way into search engines, the industry is undergoing a seismic shift.
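To put the daily figure in perspective, the arithmetic can be sketched as follows. This is a rough illustration only: it assumes the quoted $694,444 per day stays constant over a 365-day year, and the function names are hypothetical, not taken from the cited analysis.

```python
# Rough scaling of the daily compute cost quoted in the text.
# Assumption: the cost is flat (no growth, no efficiency gains).
DAILY_COMPUTE_COST_USD = 694_444


def annualised_cost(daily_cost_usd: int, days: int = 365) -> int:
    """Scale a constant daily cost to a period of `days` days."""
    return daily_cost_usd * days


def hourly_cost(daily_cost_usd: int) -> float:
    """Spread a daily cost evenly over 24 hours."""
    return daily_cost_usd / 24


if __name__ == "__main__":
    print(f"Per year: ${annualised_cost(DAILY_COMPUTE_COST_USD):,}")  # Per year: $253,472,060
    print(f"Per hour: ${hourly_cost(DAILY_COMPUTE_COST_USD):,.2f}")   # Per hour: $28,935.17
```

Under that flat-rate assumption, the daily figure works out to roughly $253 million a year.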

Microsoft’s Bing, armed with this advanced technology, is poised to challenge the stronghold of giants like Google. However, the high cost of integrating LLMs into search, which could reshape Google’s profitability, underscores the strategic manoeuvres companies must undertake in this evolving digital arena.

Credit: SemiAnalysis

When Robots Speak: The AI-Robotics Confluence: Charting the New Robotic Age

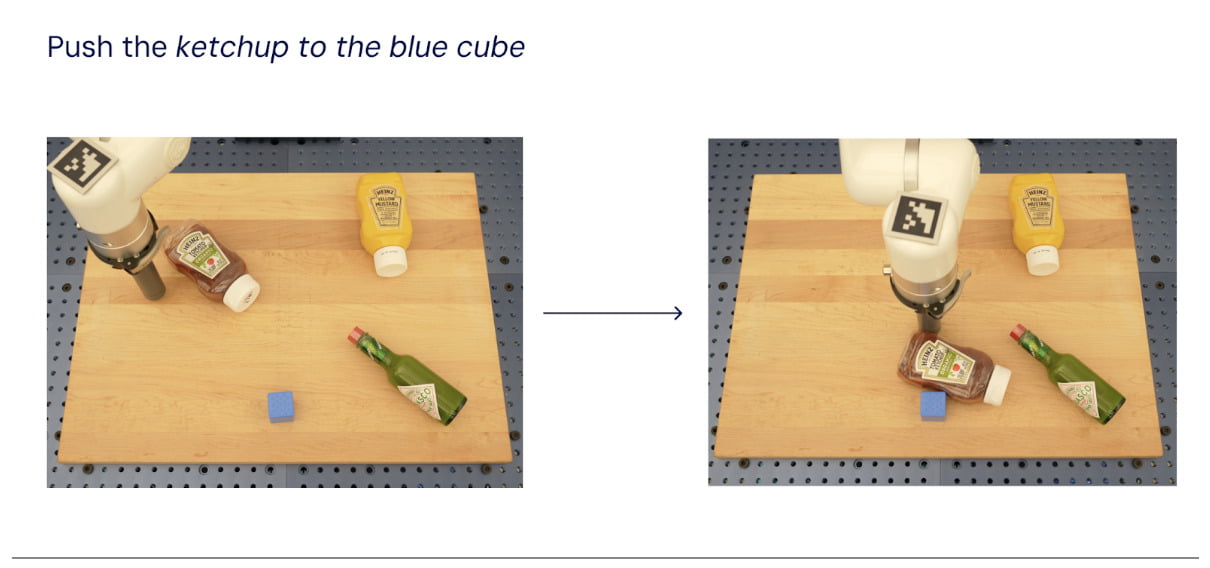

Marrying large language models with robotics isn’t just a technological feat; it’s the dawn of a new era. As robots evolve to comprehend and interact with their surroundings, we’re on the cusp of a productivity revolution. DeepMind’s Robotic Transformer 2 (RT-2) exemplifies this evolution, seamlessly integrating vision, language, and action to redefine robotic capabilities.

Building on web-scale knowledge and robotics data, RT-2 demonstrates enhanced generalisation abilities. It also paves the way for robots that can reason, problem-solve, and adapt to diverse real-world tasks.

Yet, as we celebrate these advancements, we must also ponder the societal shifts accompanying this merger. How will our roles change, and what new opportunities and challenges will emerge in this transformative landscape?

AI’s Paradoxical Progress: The Dichotomy of Hype and Adoption

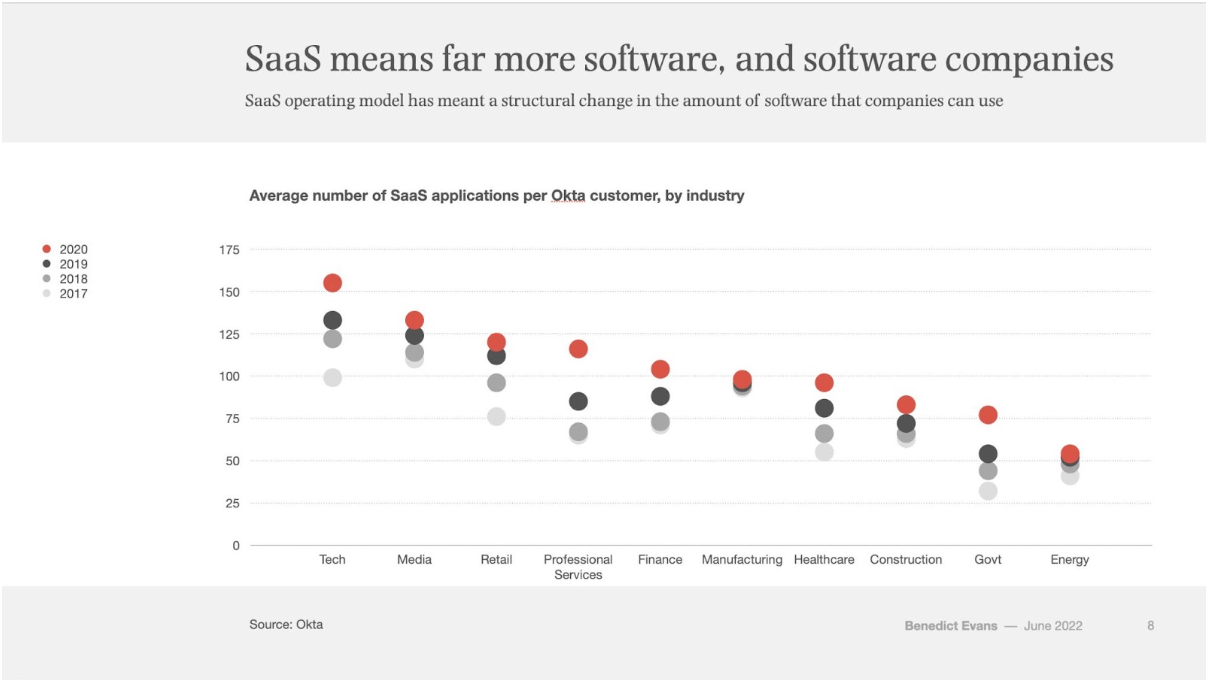

For all the headlines AI garners, its corporate adoption paints a more nuanced picture. While the media often portrays AI as the next big game-changer, the reality on the ground, especially in the corporate sector, tells a different story.

Taking a cue from the measured pace of SaaS integration into the corporate world, it becomes evident that AI’s integration might not be as swift or straightforward as many predict. The slow and steady adoption of SaaS, despite its clear benefits, underscores the complexities and challenges businesses face when integrating new technologies.

It’s not just about the technology’s potential but also about its alignment with business goals, the readiness of the infrastructure, and the adaptability of the workforce. This pattern serves as a poignant reminder that true innovation isn’t just about breakthroughs and buzzwords. It’s a journey that demands both enthusiasm to embrace the new and the endurance to navigate the challenges, ensuring sustainable and meaningful integration.

Credit: Benedict Evans

The New Digital Oligarchy?

In the digital arena, a few giants (Apple, Google, Amazon, Meta, and Microsoft) are staking their claim, fortified by vast data lakes, unparalleled AI expertise, and near-boundless computational prowess. Their advantage isn’t just technological; it’s strategic:

- Vast customer bases offering continuous data and insights.

- Extensive data repositories that refine their algorithms.

- Profound AI development know-how.

- Boundless resources for training advanced models.

With the leverage of Large Language Models (LLMs), these titans are poised to capitalise further by deploying generative AI at an unprecedented scale. For them, LLMs enhance an already formidable foundation.

In contrast, smaller entities grapple for a foothold. As they harness AI’s potential, the question arises: In this AI-driven future, is there room for the Davids alongside the Goliaths?

The AI tapestry is rich, complex, and multi-dimensional. From critical voices to the towering presence of tech behemoths, AI’s narrative is as much about technology as it is about society, ethics, and the future we’re shaping. As we traverse this landscape, a holistic, informed, and balanced perspective remains our most valuable compass.