The Current State of Sports Analysis

This article reviews the current state of human action recognition software and techniques, and how human action recognition is used in sports analysis. It discusses what improvements are available, what sports networks, specifically SoccerNet, currently use for human action recognition, and what methods could be implemented for better sports statistics. Lastly, it considers which enhancements future research on human action recognition in sports analysis could lead to.

Definition

Human action recognition (HAR) is the task of localizing and recognizing human-induced events in a video.

What does that mean? In sports analysis, HAR recognises the body movements of players and referees in order to record and learn things like gait, head tilts, kicking, running and other fast-action movements.

Unfamiliar with Sports Analysis? Check out our in-depth articles on The Role of Artificial Intelligence in Sport Tech and Sports Analytics.

What is the goal of HAR?

To create a computer that not only sees a video but also understands what is going on inside it and what actions the humans in it have performed.

What are the current applications of HAR?

- Visual Surveillance

- We use video surveillance to help with routine traffic issues and to monitor the safety of property and of people in public spaces, but more mature video surveillance can tell whether someone is holding a weapon or a cell phone in their hand. These high-tech advancements can be utilised in sports analysis too, for example to see the smallest detail, such as the tip of the ball passing the goal line.

- Gestures

- By using HAR, we can recognise routine gestures that people make, such as the way they tilt their head, their smile or frown, their hand motions, and their body language and posture.

- Medical

- In the medical field, heart pulse is a method of HAR.

- Education

- HAR can monitor attendance levels of classes.

So why sports and HAR? What is lucrative or valuable about that?

Basically, sports have been monetised. Sports generate extremely high revenue, so they are lucrative. The broader consumer market watches sports content; thus, it is valuable to use HAR in sports not only for the fans, who get to see replays, but also for the referees, who need to watch the replays to determine the outcome of a close call. (HAR can do so much more than this!)

Sports are defined by rules, which makes it easier for a computer to understand the game.

Actions are also hard to fake in sports: the athletes' fast-action movements are authentic, which makes footage of them valuable for HAR.

HAR is also easy to research, since we already film sports live and have recordings of play-by-plays. The reruns of sporting events are at our fingertips, making it easy to implement HAR as a part of sports analysis. Because of these available recordings, it is also easy to analyze an entire season in a snapshot.

Who benefits from HAR?

Automated video understanding could benefit both professionals and broadcast video providers. For professionals such as referees, scouts, athletes and coaches, HAR offers several things: referees can review plays; scouts can re-watch how players kick, throw and run before offering them contracts; and athletes and coaches can review footage of athletic technique and focus on ways to improve a player's form. The recordings also let players build a portfolio that shows off their skills, so they can showcase their value with advanced analytics and argue for higher pay as they build their careers.

Problems with HAR?

- Actions may be performed:

- by different agents (i.e. players)

- at varying speed

- under diverse lighting or weather conditions

- Cameras for filming could:

- be static or dynamic

- film the actions from different points of view

- Actions are typically fast and scattered throughout the match

- Actions may be subject to partial or total occlusion

Together, these leave many open problems related to the conditions on the field, the sensors, and the type of sport.

Just where does our HAR data reside? What are the datasets?

A few notable datasets that hold HAR sports recordings are:

- UCF Sports Dataset (from the University of Central Florida, with footage collected from broadcast channels such as the BBC and ESPN)

- Olympic sports

- Sports-1M

- SoccerNet-v2

We need varying datasets for the varying sports or sporting events. It is near impossible to store all sports and sporting events in one dataset; thus, there are different datasets to separate sports by category or by sporting event, such as UFC, soccer, Olympic sports, etc. A pro and con of datasets is that they are vast and full of information. Some datasets contain over 1 million videos.

Thus, datasets require sub-categories within the sport. Perhaps these sub-datasets are by date, team, tournament, player, etc. Annotations can be added to these short sport clips for easy browsing and searching by keywords.

Who can see and use these datasets?

Several datasets are available for those who wish to develop video analysis tools for sports. But only a handful are actually useful for commercial projects.

What is the general overview of the process for HAR? What is it capturing and doing?

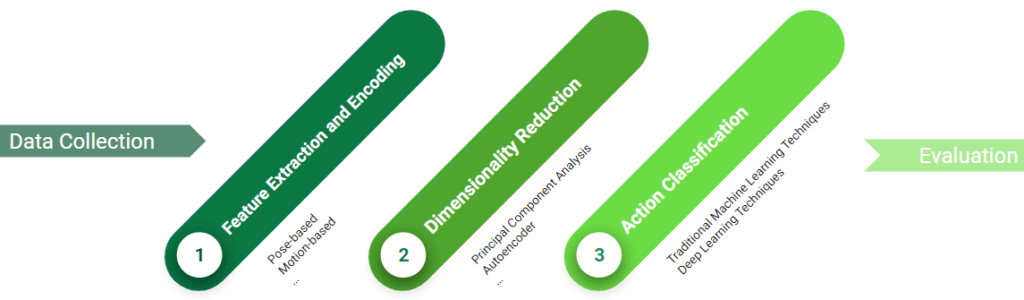

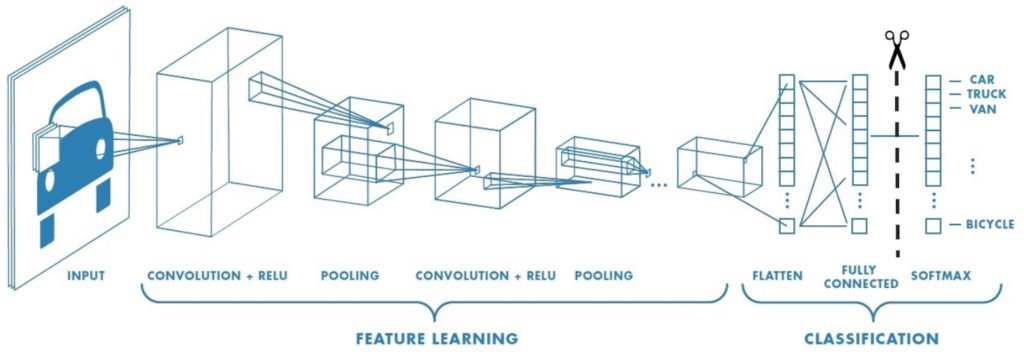

An HAR pipeline has built-in feature extraction and encoding steps that aim to find a meaningful representation of actions, for instance by capturing poses and motions. We call this pose-based and motion-based HAR. Since the information represented could be redundant, the pipeline can also include dimensionality reduction through principal component analysis or autoencoders. Finally, it performs action classification, exploiting both traditional machine learning techniques and deep learning techniques.
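The three stages above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the two hand-made features (knee angle and speed), the training clips, and the helper names are hypothetical, the PCA step keeps a single component estimated by power iteration, and a nearest-centroid rule stands in for the machine learning or deep learning classifier a real system would use.

```python
# Hypothetical hand-made features per clip: (knee angle in degrees, speed in m/s).
training = {
    "kick": [[80.0, 1.0], [85.0, 1.2]],
    "run": [[30.0, 6.0], [35.0, 6.5]],
}

def fit_first_component(rows, iterations=100):
    """Estimate the mean and the top PCA direction by power iteration."""
    n, dim = len(rows), len(rows[0])
    mean = [sum(col) / n for col in zip(*rows)]
    centered = [[x - m for x, m in zip(row, mean)] for row in rows]
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(dim)]
           for i in range(dim)]
    vec = [1.0] * dim  # assumed not orthogonal to the top direction
    for _ in range(iterations):
        vec = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
        norm = sum(v * v for v in vec) ** 0.5
        vec = [v / norm for v in vec]
    return mean, vec

def project(row, mean, component):
    """Dimensionality reduction: map one feature vector to a single coordinate."""
    return sum((x - m) * c for x, m, c in zip(row, mean, component))

all_rows = [row for rows in training.values() for row in rows]
mean, component = fit_first_component(all_rows)

# Classification step: one centroid per action in the reduced space.
centroids = {
    label: sum(project(r, mean, component) for r in rows) / len(rows)
    for label, rows in training.items()
}

def classify(row):
    """Return the action whose centroid is closest to the projected sample."""
    value = project(row, mean, component)
    return min(centroids, key=lambda label: abs(value - centroids[label]))

print(classify([82.0, 1.1]), classify([32.0, 6.2]))
```

In practice the features would come from a pose or motion extractor rather than being typed in by hand, and the reduced space would keep more than one dimension.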

What about time? How is time exploited?

It depends on the technique you want to use. Some techniques already take time into consideration, for instance the motion-based ones. They don't consider one frame at a time; instead, they take a couple of frames together to determine how the person moves over a short period of time.
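As a rough sketch of what "taking a couple of frames together" can mean, the snippet below computes a simple motion signal from consecutive frame pairs. Frame differencing is used here only as the simplest possible stand-in for a motion-based feature; the function names and the toy clip are assumptions made for illustration.

```python
def frame_difference(prev_frame, next_frame):
    """Per-pixel absolute difference between two grayscale frames (lists of rows)."""
    return [
        [abs(b - a) for a, b in zip(row_prev, row_next)]
        for row_prev, row_next in zip(prev_frame, next_frame)
    ]

def motion_energy(frames):
    """Average absolute change per pixel for each consecutive pair of frames."""
    energies = []
    for prev_frame, next_frame in zip(frames, frames[1:]):
        diff = frame_difference(prev_frame, next_frame)
        n_pixels = sum(len(row) for row in diff)
        energies.append(sum(sum(row) for row in diff) / n_pixels)
    return energies

# Toy 2x3 grayscale clip: a bright "player" pixel moves one step right, then stops.
clip = [
    [[0, 255, 0], [0, 0, 0]],
    [[0, 0, 255], [0, 0, 0]],
    [[0, 0, 255], [0, 0, 0]],
]
print(motion_energy(clip))  # [85.0, 0.0]
```

A single frame says nothing about movement; the first pair shows motion and the second shows none, which is exactly the information a frame-by-frame method would miss.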

What about deep approaches vs. non-deep approaches? What is the breakdown of these? Is anyone using non-deep approaches?

No one seems to be using non-deep approaches anymore. All are using deep-learning techniques because they obtain better results, at the price of a higher computational cost. We must therefore be cognizant of storage space and memory requirements.

What does Feature Extraction do?

Feature extraction generates a meaningful representation of actions that can be used in later steps. An example of feature extraction is people detection and tracking. With this technique, we can track players and attach a name to each person as they run around the field, just like in a sports video game such as FIFA 22 or Madden NFL 21.

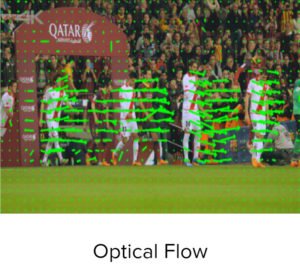

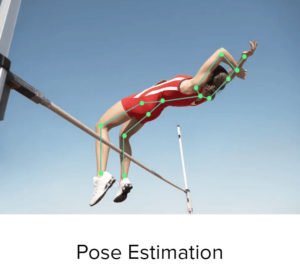
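To make the tracking idea concrete, here is a minimal sketch of how detections in a new frame could inherit player labels from the previous frame. It assumes a separate detector already supplies bounding boxes as `(x1, y1, x2, y2)` tuples; the greedy overlap matching, the `min_iou` threshold and the player labels are illustrative assumptions, not the method of any particular system.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def update_tracks(tracks, detections, min_iou=0.3):
    """Give each detection the label of the best-overlapping unused track.

    Tracks without a match are dropped and unmatched detections are ignored,
    which keeps the sketch short; a real tracker would handle both cases.
    """
    updated, used = {}, set()
    for det in detections:
        best_label, best_score = None, min_iou
        for label, box in tracks.items():
            score = iou(box, det)
            if label not in used and score >= best_score:
                best_label, best_score = label, score
        if best_label is not None:
            updated[best_label] = det
            used.add(best_label)
    return updated

# Hypothetical labels and boxes from the previous frame, then new detections.
tracks = {"player_7": (10, 10, 20, 30), "player_9": (50, 10, 60, 30)}
detections = [(52, 10, 62, 30), (11, 10, 21, 30)]
print(update_tracks(tracks, detections))
```

Each player keeps their label as long as their box overlaps enough from one frame to the next, which is what lets a name follow a person around the field.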

Other feature extraction techniques are optical flow and pose estimation. For example, they can capture the poses and motions of Track & Field jumpers or pole-vaulters. We can look at the players more closely, in fine detail, and we can look at the motion of the players. Additionally, we have the view of the cameraman, which is also interesting because the cameraman chooses where the audience is focused, which offers a distinct point of view.

How do we extract features that capture information from both entities—the player and the camera?

We should be able to isolate the movement of the camera from that of the players. Cameras and players are both important sources of information! CNNs can be used to extract features from both.
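One simple way to picture this separation, without any CNN, is to treat the dominant motion in the frame as the camera and whatever is left over as the players. The sketch below is a deliberately simplified stand-in for the learned approach: it assumes optical-flow vectors are already available, that the camera only pans (a pure translation), and that most tracked points lie on the background. The function name and the sample values are hypothetical.

```python
from statistics import median

def split_camera_and_player_motion(flow_vectors):
    """Split per-point flow into a global camera shift and residual motion.

    The camera shift is estimated as the median flow, so a minority of
    independently moving points (players) does not distort it.
    """
    camera_dx = median(dx for dx, _ in flow_vectors)
    camera_dy = median(dy for _, dy in flow_vectors)
    residuals = [(dx - camera_dx, dy - camera_dy) for dx, dy in flow_vectors]
    return (camera_dx, camera_dy), residuals

# Four background points follow a pan to the right; the last point is a player.
flow = [(2.0, 0.0), (2.0, 0.0), (2.0, 0.0), (2.0, 0.0), (5.0, 1.0)]
camera, residuals = split_camera_and_player_motion(flow)
print(camera)         # (2.0, 0.0)
print(residuals[-1])  # (3.0, 1.0)
```

Both outputs are useful: the camera shift says where the operator is steering the audience's attention, and the residuals describe how the players move on their own.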

What are the techniques of feature extraction within SoccerNet?

SoccerNet is a scalable dataset for action spotting in soccer videos. Its pipeline extracts frame features with ResNet-152, then applies principal component analysis, temporal pooling, and action classification.

SoccerNet uses a holistic approach to address the loss of the temporal component. When considering one frame at a time, you completely lose the information about time and the correlation between one frame and the next. To combat this, SoccerNet uses temporal pooling to bring these frames together, through max pooling or more advanced techniques like NetVLAD++.

They used aggregate observations over time to improve performance!
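The max-pooling variant of this aggregation is easy to sketch. The snippet assumes each frame has already been turned into a feature vector (in SoccerNet these come from ResNet-152 followed by PCA); the two-dimensional toy features, the window and the stride are illustrative values only, and NetVLAD++ is a learned pooling layer that is not reproduced here.

```python
def temporal_max_pool(frame_features, window, stride):
    """Max-pool each feature dimension over sliding windows of frames."""
    pooled = []
    for start in range(0, len(frame_features) - window + 1, stride):
        chunk = frame_features[start:start + window]
        # zip(*chunk) groups the values of one feature dimension across frames.
        pooled.append([max(values) for values in zip(*chunk)])
    return pooled

# Four frames with two features each (toy numbers, not real ResNet outputs).
features = [
    [0.1, 0.9],
    [0.4, 0.2],
    [0.8, 0.3],
    [0.2, 0.7],
]
print(temporal_max_pool(features, window=2, stride=2))  # [[0.4, 0.9], [0.8, 0.7]]
```

Each pooled vector summarises a short stretch of video, so the classifier sees evidence gathered over time rather than a single frame.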

Are there any examples of methods that are extracting features and capturing temporal features in a very direct way? Are there more complex methods without this temporal pooling so that we exploit the temporal information directly instead of indirectly?

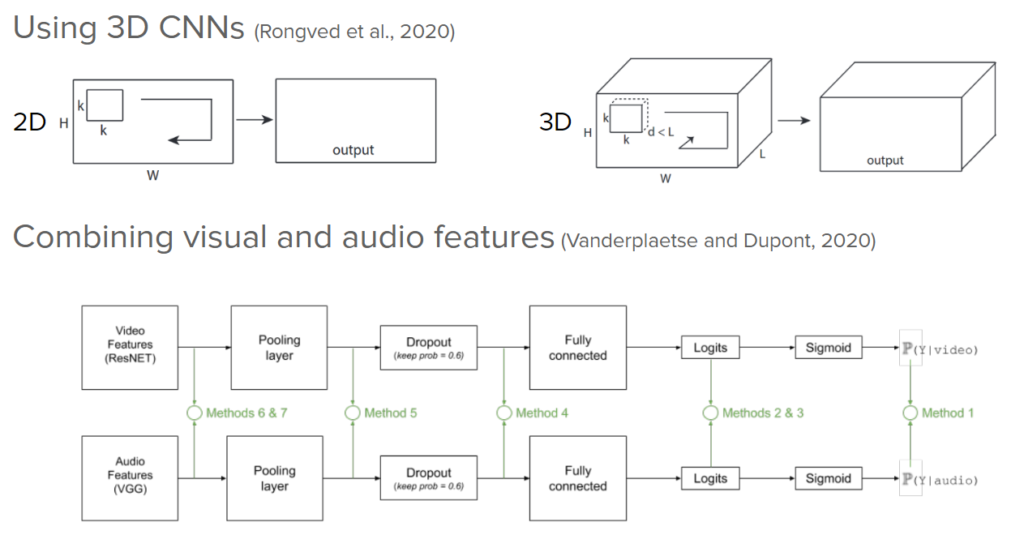

Yes. Further improvements include using 3D CNNs and combining visual and audio features. 3D CNNs can be helpful for other datasets: they are more suitable for general tasks, while other configurations are better suited to SoccerNet.

Audio picks up cheers, chanting, and the other noises and sounds that surround a sport or sporting event. If you have it, use audio for event detection. Sonitus Systems here in Dublin, Ireland, has noise monitoring, sound analysis, and microphones set up near Croke Park to capture sound data when a sports match is on.

What happens if there is no audio, no audience, or audio degradation has occurred? Thankfully, the video and audio features can be independent of each other.
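Because the two streams are independent, one straightforward way to combine them is late fusion: score the events from each stream separately and average the scores, falling back to video alone when audio is missing. The sketch below assumes per-class scores are already available from each stream; the function name, the class labels and the `audio_weight` value are hypothetical.

```python
def fuse_scores(video_scores, audio_scores=None, audio_weight=0.3):
    """Late fusion of per-class scores; uses video only when audio is missing."""
    if audio_scores is None:
        return dict(video_scores)
    return {
        label: (1 - audio_weight) * video_scores[label]
        + audio_weight * audio_scores.get(label, 0.0)
        for label in video_scores
    }

video = {"goal": 0.6, "foul": 0.4}
audio = {"goal": 0.9, "foul": 0.1}  # e.g. a loud cheer supports "goal"

with_audio = fuse_scores(video, audio)
without_audio = fuse_scores(video)  # empty stadium or degraded audio
print(max(with_audio, key=with_audio.get), without_audio)
```

With this design, losing the audio stream degrades the prediction gracefully instead of breaking the pipeline.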

Does temporal information improve the performance for soccer or not?

Exploiting temporal information directly doesn't actually improve the performance on SoccerNet, even though the temporal data is there and there is some correlation between one frame and the next for certain events.

Is temporal pooling a problem with SoccerNet or can we do something more to gather and extract information that we are doing quite right at the moment?

Temporal pooling is not a problem for SoccerNet, because it is currently the best-performing method on it. For other datasets, though, other methodologies could be fine-tuned to improve performance, and it would be interesting to see whether performance could be higher with different parameters. Future work will likely involve RNNs (recurrent neural networks) and performance tests, taking into account the temporal upsets, of course.

In Conclusion

Video Analysis in AI sports tech will continue to:

- use other methods for feature extraction

- exploit the combination of several input streams

- use RNNs or Transformers

- create a generic architecture that adapts to different sports

Author: Samantha Sink